Importance of Efficient Notifications in Terraform & IaC Automation

By Yangci Ou

Table of Contents

- Introduction

- The Silent Failure Problem in IaC Deployments

- Notifications

- Alert Fatigue

- Targeted Deployment Notifications

- Spacelift’s Infrastructure as Code Terraform CI/CD Automation

- Open Sourcing our Spacelift Notification Policy Template

- Conclusion

Introduction

In the world of Terraform and OpenTofu (collectively referred to as “TF” in this post) and Infrastructure as Code (IaC), there are times where the CI/CD pipeline or automation fails to deploy IaC changes. Failures could occur due to the code errors, cloud provider resource constraints, authentication issues, state & locking conflict, transient provider or network disruptions.

Because there are so many factors involved in cloud infrastructure, there’s more possibility for failed TF applies – such failures are acceptable and can easily be addressed with simple follow-up changes or reruns.

But do you know what’s way worse than a failed TF deployment? A deployment failure that doesn’t get noticed for days.

In this article, we’ll talk through this problem and share how we believe it should be handled. We’ll include specifics on how we do this today with Spacelift for our clients.

The Silent Failure Problem in IaC Deployments

When infrastructure deployments fail, they often do so quietly. They can go unnoticed if there is no alerting set up – particularly in those projects still relying on manual TF executions from local machines rather than in an IaC automation pipeline, where the failure remains on that individual’s machine and is invisible to others. It leaves the root modules’ TF changes and modifications in an unapplied or failed state, until another change/TF operation reveals them. Without a centralized alerting system, there’s no guarantee it will be properly addressed or even communicated, delaying resolutions.

This is especially true in large IaC codebases or large TF monorepos where there are hundreds of root modules. The automation system dutifully reports the failure, but the failure slips through the cracks because of many apply operations running concurrently due to the sheer number of root modules.

At Masterpoint, we’ve observed this problem repeatedly across many of our clients when performing infrastructure audits, implementations, and ongoing managed service provider (MSP) relationships. As an organizations’ infrastructure scales and complexity grows, the likelihood of these silent failures increase which undermines infrastructure reliability.

When TF failures go unnoticed, potential problems can occur and cascade into significant issues such as:

- Infrastructure drift - Your actual infrastructure diverges from your desired state in the IaC, which is never good.

- Loss of source of truth - Your VCS/Git repository no longer accurately reflects your deployed infrastructure.

- Development roadblocks - Subsequent changes become blocked because new deployments can’t proceed until the failure is resolved.

- Increased troubleshooting complexity - The longer a failure is unnoticed, the harder it becomes to troubleshoot it, since you have outdated state data and have lost context about what changed in the first place.

- Wasted engineering time - Developers waste time days after the failure investigating issues that could’ve been more easily diagnosed on the spot.

Notifications

When teams first encounter the silent failure problem in IaC deployments, the common initial solution is straightforward: set up basic notifications. In the automation pipeline, on failures, it would capture the error and send a Slack/Microsoft Teams message into the corresponding channel for the team responsible for the TF code.

Notifications bring awareness of problems as early as possible for someone to look into and address. Without such notifications, failures are invisible until they cascade into larger problems.

Alert Fatigue

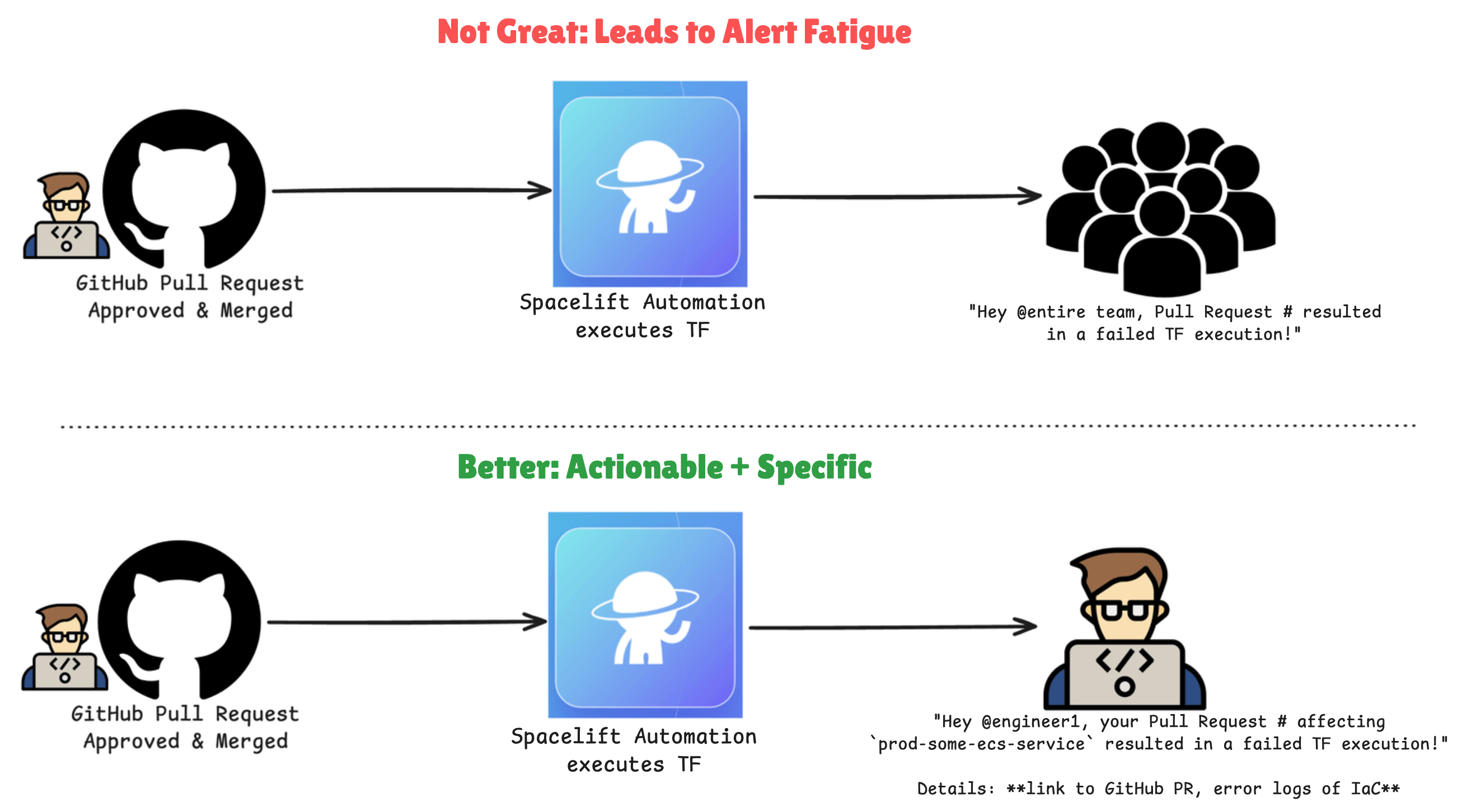

But often solutions create their own problems. When in a large infrastructure team environment with hundreds of root modules and components, another problem comes up with notifications: alert fatigue. Alert fatigue isn’t unique to TF infrastructure automation – it’s a universal challenge that affects all alerting and notification systems.

When every TF Apply that fails pings everyone in the channel with an universal tag such as @everyone or @here, notifications quickly become background noise. Teams become desensitized to constant alerts and critical failures (for example, load balancer modifications failing and affecting traffic routing) might get drowned out with minor issues.

This is particularly problematic in organizations with large cloud infrastructure footprints managed by IaC – notifications are usually important to someone, but when everyone on the team gets every notification, they are noticed by none.

Targeted Deployment Notifications

When we encountered alert fatigue and saw teams getting bogged down with too many notifications that might not be relevant to the appropriate engineer, we understood that we needed to make our notifications and alerts both precise and actionable. The approach of broadcasting all failures to an entire global channel was clearly ineffective. The notifications should tie the TF failed apply to the specific team. More specifically, where appropriate, the notifications should be tied to the engineer who made the code change. They are most likely the person best suited for handling the issue and has the most context.

Notifications through Spacelift’s IaC Automation

We frequently use and champion Spacelift as the TF automation platform for managing the IaC of our clients. One of the big features that we love in Spacelift, is their deep integration and belief in “policy-as-code”. We use this to express and execute well-defined policies at various decision points in the IaC management workflow using the OPA Rego policy language.

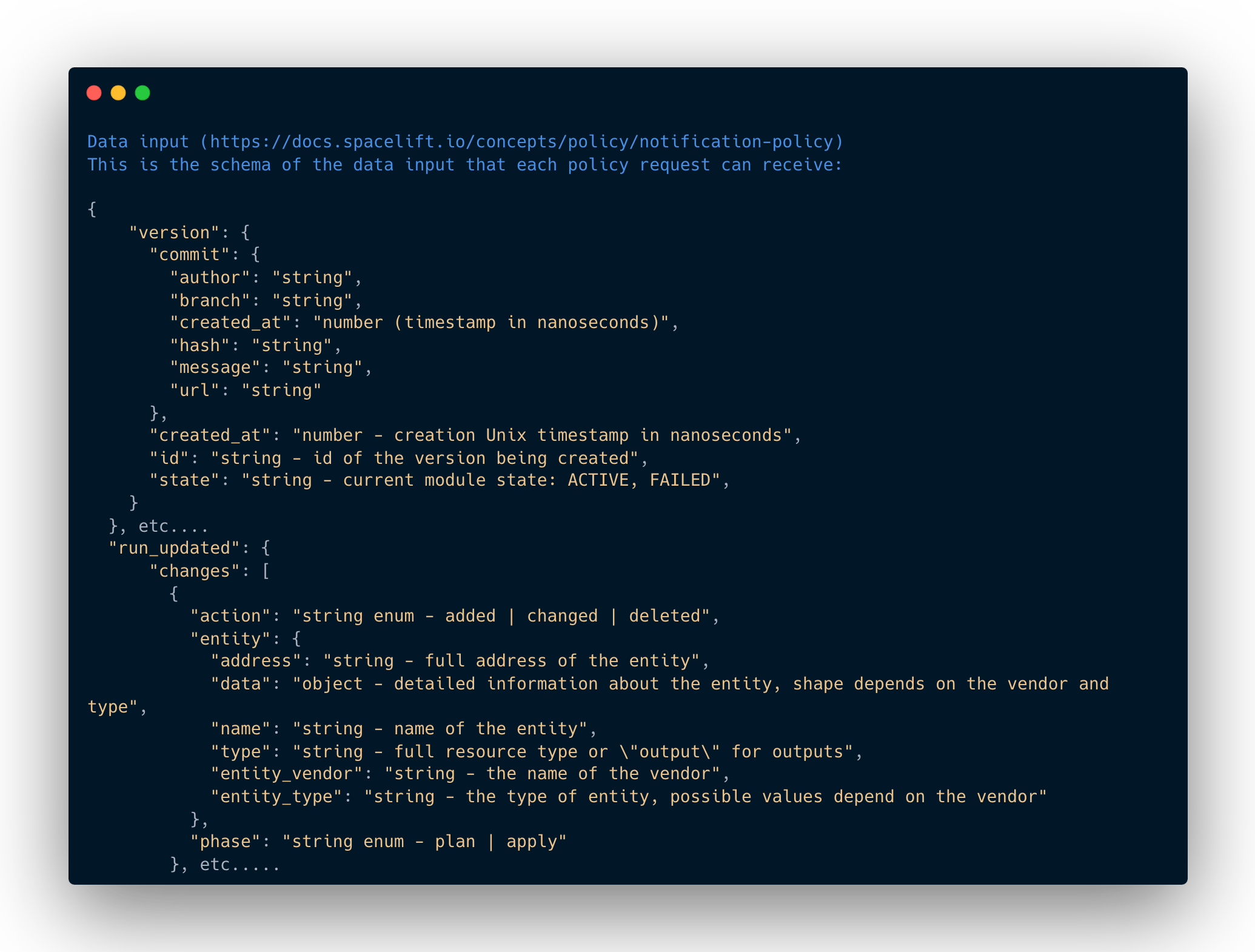

What makes Spacelift’s policy engine shine for notification use cases is its ability to access detailed information about the TF apply that just occurred, including:

- who triggered it,

- timestamps,

- the type of TF operation (add, modify, destroy),

- which TF resources are affected,

- the VCS (such as Git) context,

- state of the TF execution,

- and more.

This detailed information is passed in as a JSON data input, containing comprehensive details about each step in the TF automation pipeline. It provides everything needed for precise alerting.

From those details, we can evaluate conditions and send out notifications with OPA policies. This enables us to easily send the right notification at the right time to the right designated team member(s). These notifications are also turnkey integrated with Slack, Microsoft Teams, GitHub Pull Requests, and other downstream systems.

The beauty of this approach is that we didn’t need to build any external systems or complex integrations. The data and notification delivery is handled directly within Spacelift’s policy engine, making the solution both robust and easy to maintain.

Our improved solution using Spacelift’s Notification Policies does the following:

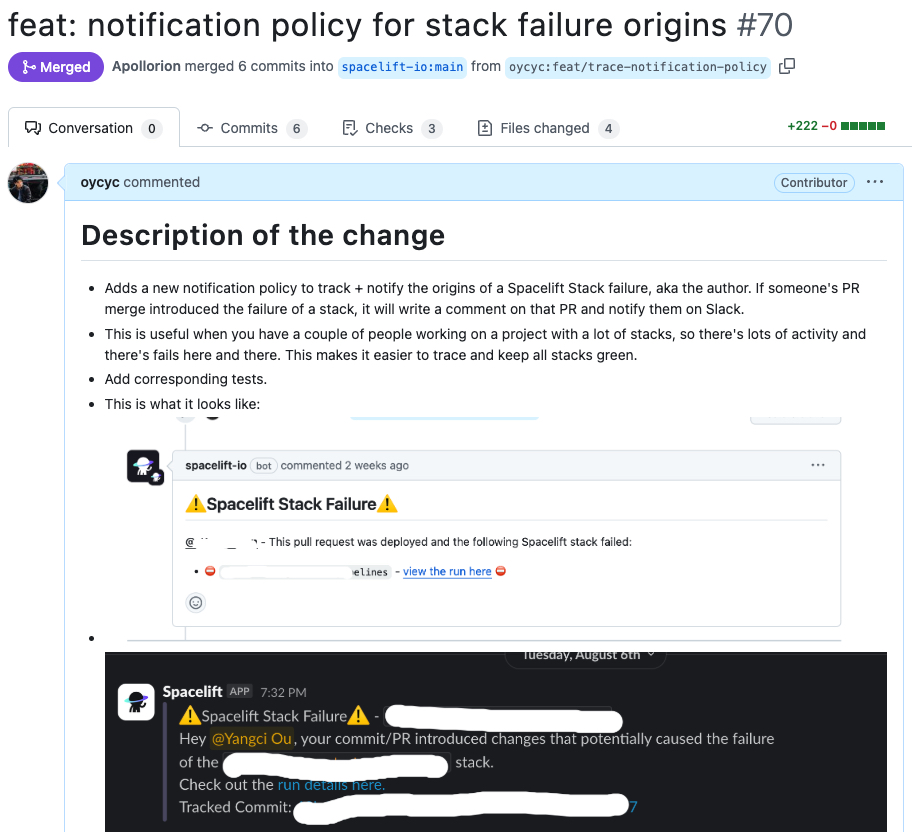

- Directly targets the responsible Pull Request. The new alerts now directly ping & tag the author of the commit that introduced and triggered the failed deployment.

- It’s important to note that this is NOT blaming the author, especially since TF Apply errors can be common. Rather, this is proactive, ensuring that the failure notification reaches the person most capable of resolving the issue efficiently.

- Provides rich contextual information by including links to the failed run and the specific code that caused it, as well as additional relevant information from the Spacelift policy data inputs mentioned above, such as identifying who the change is associated with.

- Implements deduplication so repeated issues such as identical root module failures don’t create a flood of redundant notifications. This is especially important on GitHub Pull Requests where notifications (comments in the GitHub PR context) can be updated in-place.

- Uses multiple notification channels for different failure priority levels. Critical resource issues (e.g. production related infrastructure deployments) deserve different treatment than minor sandbox environment failures.

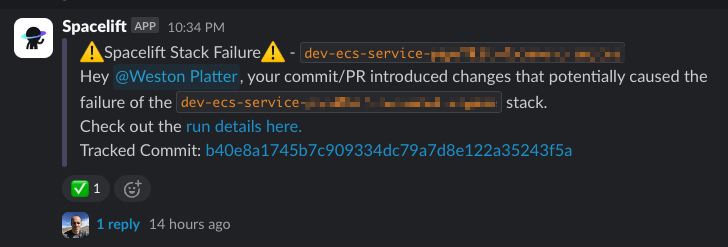

Below is a simple targeted notification example – with different use cases in different organizations, the actual messaging and content will vary greatly to suit what different teams require to best address their needs.

This new implementation of IaC failure alerting using Spacelift Notification Policies has resolved many pain points for us and our clients. It has significant benefits including faster resolution times, increased accountability, and decreased alert fatigue, which all contribute to less infrastructure drift and more reliable operational stability.

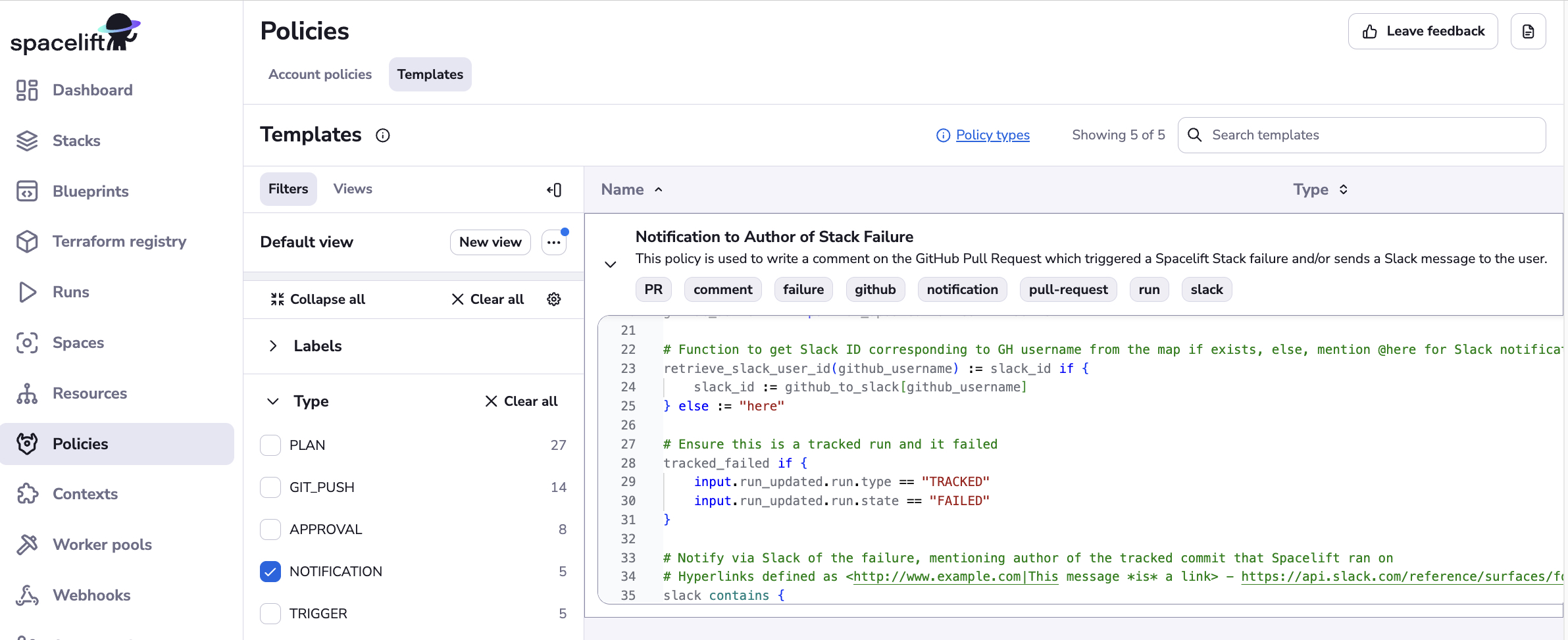

Open Sourcing our Spacelift Notification Policy Template

After seeing the tremendous benefits this custom notification policy brought to different teams, we shared our solution with the broader Spacelift community by extracting our Rego policy implementation into a template and open sourced the code by contributing it to Spacelift’s Policy Template Library. Developers using the Spacelift platform can import the policy to use for their system or repurpose it and tailor it to their specific organization’s needs.

You can view this policy on Spacelift’s Policy Template Library here or on Spacelift’s GitHub repository.

Conclusion

In the end, IaC automation failure notifications aren’t just a nice-to-have, they’re essential. This is especially true for teams working with TF and IaC at scale. Effective notifications aren’t about making sure everyone knows about every failure – they’re about making sure each failure notice goes to the right person(s) who can resolve the issue.

By implementing this tailored notification approach, we’ve transformed TF Apply failure notifications from an ignored annoyance to a valuable tool for operational stability. This proactive strategy keeps our IaC workflow streamlined, and prevents failures from snowballing into larger issues.

P.S. Interested in exploring more about Spacelift for managing Infrastructure as Code? We recently published a case study about migrating over 43,000 resources from Terraform Cloud to Spacelift!

👋 If you're ready to take your infrastructure to the next level, we're here to help. We love to work together with engineering teams to help them build well-documented, scalable, automated IaC that make their jobs easier. Get in touch!